What up, data nerds! I'm Luke, a data analyst, and my channel is all about tech and skills for data science. In this video I want to cover the pathway I'd take to become a data analyst if I had to start over again. I'm not only going to share the skills I recommend learning but also my process for learning new skills. I've applied and refined this process from my time in school studying engineering, to my time in the Navy learning how to drive a nuclear-powered submarine, to, more recently, learning all the different skills of a data analyst so I can keep progressing in my job. It has also been refined by my interactions with people who have not only landed data analyst jobs but have also hired others for these roles. My own journey was filled with a lot of wasted time and effort, so I'm hoping this video saves you time and effort in learning the skills you need for the job.

So let's break into my recipe for learning anything. It's an iterative two-step approach that you can apply to pretty much anything you want to learn: first you learn it, and then you use it. Let's expand on what you should be learning as a data analyst. I feel there are four general areas you should focus on: technical skills, soft skills, analytical skills, and domain knowledge. Don't worry, we'll go into all of these in a bit, but first we need to move to that next step: actually using a skill immediately after learning it. "Is it true that if you don't use it, you lose it?" "Is that a serious question?" In this case it's quite literally true: the tools I've learned in the past and never applied, I haven't been able to retain. So I think using a skill is a key part of retaining it, and you can use your skills in a variety of ways, such as coursework projects on
Coursera, portfolio projects for your resume, work projects for your job, and teaching others. There's an added benefit to this second step: because you've created something with your new skills, you now have something to showcase to an employer as experience. When you're searching for a job, you can display what you created for employers to see. I've rambled about this before, but I think online courses and certificates are great for the first step in the process, learning things. But if you don't have experience on your resume showing how you've used the skills you learned, an employer is not going to risk hiring you.

All of this relates directly to the sponsor of this video, Coursera. Coursera does a great job of hosting courses, so you can learn skills in that first step of the process, and for the second step, using them, it has projects available in its specializations and certificates. This is great because you can not only list your certificate or specialization on your resume, you can also showcase those projects as experience for employers to see.

Getting back to my learning process: once you have learned a skill and used it, it's time to iterate and learn a new skill. So where do you actually start, and which skill should you focus on first? My preference is to start with technical skills and to incorporate the other skills, such as analytical skills or domain knowledge, while you're learning a technical skill. Why start with a technical skill? One, I feel technical skills are more tangible, and it's easier to set goals you can actually accomplish; for example, you can write out which Excel functions you want to learn and then learn them. I also find technical skills more fun to learn, and I have higher motivation when I set out to accomplish them. And two, they allow you to apply other
skills while you focus on that technical skill. For example, say you're learning a technical skill like R; you could also write a blog post about it, which would showcase and build your soft skill of writing. So you're not only focusing on technical skills, you're also incorporating those other skills as well.

All right, let's jump into my technical skill roadmap. I recently did a data analytics project where I scraped job posting data from LinkedIn and found the most important skills for entry-level data analysts based on how many times each skill appeared in the job postings. My insight from this project was that Excel and SQL are the most important skills for a data analyst to learn, as they make up almost half of all job postings. Following in popularity are the BI tools, Tableau and Power BI, and then the programming languages, Python and R. So from this, my recommended roadmap is as follows. First, get a brief overview of all the different tools; I think this will help you later identify the tools you want to focus on based on your passions and interests. I like the Google Data Analytics Certificate because it teaches you a lot about the popular tools: SQL, spreadsheets, R, and Tableau. And going back to my recommendation on how to learn, it doesn't only teach you these skills; you also implement them in a capstone project for the certificate. This first step is all about breadth, not depth, and the Google certificate is perfect for it: you won't be a master of any of these skills once you complete it, but you will have a general overview of these tools, along with an introduction to other skills such as soft skills and domain knowledge. Next, it's time to master a skill, and for this we need to focus on either Excel or SQL. I recommend these two most popular tools of data
analysts because, from a probabilistic standpoint, if you have these two skills on your resume, I feel you're more likely to get hired for an entry-level data analyst job. As for whether to learn Excel or SQL first, I really leave that up to you. If you're looking for recommended resources to learn these skills, check out this recent video where I went over some top courses for learning the skills of a data analyst. After mastering both Excel and SQL, it's time to master other tools, such as BI tools and programming languages. Once again, when selecting one of these tools, I'd go off what you're passionate about: select one you have an interest in and really want to dive into, learn, and apply other skills with. It's important to understand that you don't have to master every single one of these skills to land your first job as a data analyst. I landed my first job with only Excel, but I kept developing my skills, and because of that I continued to advance in my career as a data analyst, leveling up and getting different opportunities based on the skills I was learning.

So that's my roadmap for technical skills. But what about the other areas: analytical skills, domain knowledge, and soft skills? What do I mean by these, and how do I incorporate them while learning technical skills? Let's break it down. First up is analytical skills, by which I mean things like problem solving, critical thinking, research, and math. I get a lot of questions about math, specifically whether more in-depth training or study is needed before taking any courses or diving into the field of data analytics. Before my life as a data analyst, I was fortunate enough to be exposed to a lot of different math subjects, everything from algebra to more advanced mathematics like calculus and differential equations. Because of this, and now being in my role as a
data analyst, I can say that most of the math I've applied in my job has been pretty basic, focused on algebra, probability, and statistics. I don't think subjects like calculus and discrete math are necessary, especially for entry-level data analyst roles. The good news is that most secondary schools, like high schools in the United States, expose you to algebra and also to subjects like probability and statistics. So I wouldn't worry that you don't have the math skills to get started; if you don't know something in math, you can learn it and apply it in a project as you go along.

Getting back to how to apply analytical skills in a project: when I was learning Excel, one of my portfolio projects in school was building a food nutrition calculator, a spreadsheet that could tell you what to eat in order to be healthy. This project not only required learning Excel, it also applied probability and statistics in determining which foods to recommend, along with basic algebra in calculating the macronutrient values of food. It was great for teaching me the technical skill of Excel and also for testing my analytical skills in solving the problem of building the calculator. Interestingly enough, this project came up multiple times in job interviews I was in, specifically with interviewers who were interested in physical fitness and well-being, and it was really great because it let me connect with the interviewer over a shared interest.

Next up is domain knowledge, which is knowledge of a specific discipline or field. For example, I recently asked you all what fields you were transitioning from to become a data analyst, and the results ranged from students in business and engineering to people working in industries such as education and health care. From what I've found, you don't have to switch industries or domains in order to
become a data analyst. In fact, the people who have the most success in becoming data analysts apply their newly learned analytical skills in the domain or industry they're already in. As an example, in my first role I was working in procurement, using only Excel at the time. I was looking to improve my BI tool skills, and an opportunity came up to build a solution using Power BI. Because I had a general understanding of procurement, I was able to apply my newly learned Power BI skills to build this dashboard, and I was also able to go more in depth and learn even more about the field of procurement. So for those of you working in an industry, or going to school to learn a certain subject, I highly encourage you to take a similar approach: dive into a tool while also diving deeper into your domain, so you can apply those skills in a relevant project.

Last up is soft skills, which relate to how you work and interact with other people. The current pandemic has shifted the way we interact with each other: instead of normal face-to-face interactions, we've moved to alternate forms of communication. I actually think this is a positive, because you can showcase these alternate forms of communication in your portfolio and in the projects you do. What do I mean by this? When I was learning Tableau, I decided to make a YouTube series documenting what I learned. These videos not only improved my Tableau skills but were also a way to improve my soft skill of communication, since I was getting first-hand feedback on my presentation skills. Now, I'm not saying you have to make YouTube videos per se; what I'm saying is that you can use social media to showcase the soft skills you have, such as writing posts or tutorials on Medium, sharing your code or processes on GitHub,
or making short-form content on Instagram or TikTok. All of these not only help you work on your technical and soft skills, they can also be used to showcase your experience, so employers can see how you interact with others.

All right, so that's my roadmap for how I'd learn to become a data analyst. Remember, this is not a comprehensive plan, so you don't need to learn every single skill I showed here today. Instead, I'd start small: start with one technical skill, add in another skill, maybe an analytical or soft skill you want to work on, build a project from there, and iterate. Remember, for me one skill, Excel, was good enough to get my first job as an analyst, and I feel the same can apply to you. As always, if you got value out of this video, smash that like button.
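The job-posting analysis mentioned in the video essentially counts how often each skill shows up across postings. Here is a minimal Python sketch of that idea. It is not Luke's actual code: the postings and keyword patterns are made-up placeholders, and the sketch reports the share of postings that mention each skill at least once.

```python
import re
from collections import Counter

# Hypothetical job-posting texts; the real analysis scraped LinkedIn postings.
POSTINGS = [
    "Entry-level data analyst. Advanced Excel and SQL required; Tableau a plus.",
    "Data analyst with SQL and Power BI experience. Python preferred.",
    "Junior analyst: Excel, reporting, and basic SQL.",
    "Analyst role using R or Python for statistics, plus Tableau dashboards.",
]

# Hypothetical keyword patterns; \b word boundaries keep "r" from matching inside words.
SKILLS = {
    "excel": r"\bexcel\b",
    "sql": r"\bsql\b",
    "tableau": r"\btableau\b",
    "power bi": r"\bpower bi\b",
    "python": r"\bpython\b",
    "r": r"\br\b",
}


def skill_share(postings, skills):
    """Return the fraction of postings that mention each skill at least once."""
    counts = Counter()
    for text in postings:
        lowered = text.lower()
        for skill, pattern in skills.items():
            if re.search(pattern, lowered):
                counts[skill] += 1
    return {skill: counts[skill] / len(postings) for skill in skills}


shares = skill_share(POSTINGS, SKILLS)
for skill, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{skill:10s} {share:.0%}")
```

Counting each skill once per posting, instead of counting every mention, keeps one keyword-heavy posting from skewing the ranking.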

Monday, 13 June 2022

Sunday, 12 June 2022

6 Ways That Make Data Analytics in the Hospitality Industry Useful

Excerpt copied from

for educational purposes

How Can Data Analytics Help the Hospitality Industry? (6 Tips Inside)

In recent years, more industries have adopted data analytics as they realize how important it is, and the hotel industry is no exception.

This is because the hospitality industry is data-rich. And the key to maintaining a competitive advantage has come down to ‘how hotels manage and analyze this data’.

With the changes taking place in the hospitality industry, data analysis can help you gain meaningful insights that can redefine the way hotels conduct business.

Below are some of the ways through which data analytics in the hospitality industry can help hotels with business strategies.

Ways Data Analytics Can Help the Hotel Industry

Data analytics is especially important in the hotel industry, which serves millions of guests every day, each with their own set of preferences, expectations, and needs for the journey.

But how does it impact your hotel’s business? Let’s take a deeper look at the use of data analytics in the hospitality industry.

1. Can assist in improving revenue management

Data analytics in the hospitality industry can help hoteliers to develop a strategy for managing revenue by using the data gathered from various sources like the information found on the internet.

Through analysis of these data, they can make predictions that will help owners with forecasting. They would learn about:

- Expected demand for accommodation in their hotels

- The best price-value ratio for their guests

Revenue management professionals look for opportunities to sell services to the right buyer, through an appropriate marketing channel, at a fair price.

Experts monitor various measurements to determine how competitive a property is compared with its competitive set (compset), the group of competing hotels it benchmarks itself against.

Different kinds of data can be beneficial in improving revenue management, such as current bookings, past occupancy levels, and other key performance statistics.
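The article doesn't name the measurements. Three commonly tracked ones are occupancy, average daily rate (ADR), and revenue per available room (RevPAR), and RevPAR is often compared against the compset as an index. Here is a minimal sketch with made-up numbers, assuming these standard definitions.

```python
def revenue_kpis(room_revenue, rooms_sold, rooms_available):
    """Return occupancy, ADR, and RevPAR for one period (e.g. one night)."""
    occupancy = rooms_sold / rooms_available  # share of available rooms that were sold
    adr = room_revenue / rooms_sold           # average daily rate per sold room
    revpar = room_revenue / rooms_available   # revenue per available room = occupancy * ADR
    return {"occupancy": occupancy, "adr": adr, "revpar": revpar}


def revpar_index(own_revpar, compset_revpar):
    """100 means in line with the compset; above 100 means outperforming it."""
    return 100 * own_revpar / compset_revpar


# Hypothetical night: 100 rooms, 80 sold, $12,000 room revenue; compset RevPAR $110.
kpis = revenue_kpis(room_revenue=12_000, rooms_sold=80, rooms_available=100)
index = revpar_index(kpis["revpar"], compset_revpar=110)
print(f"Occupancy {kpis['occupancy']:.0%}, ADR ${kpis['adr']:.2f}, "
      f"RevPAR ${kpis['revpar']:.2f}, RevPAR index {index:.1f}")
# Occupancy 80%, ADR $150.00, RevPAR $120.00, RevPAR index 109.1
```

RevPAR combines price and occupancy in one number, which is why it is a common basis for compset comparisons.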

2. Helps improve services and guest experience

With hotel data analytics, you can gather information such as guests’ feedback about the hotel’s services and their experience during their stay.

This information is readily available on platforms like social media, in reviews published in magazines and on hotel websites, and even in notes guests leave for the hotel.

Alongside reviews, you can also use traditional surveys, which are more detailed and provide insight into the factors that influence guests’ booking decisions.

With this information, hotel owners and managers can know about their property’s strengths and weaknesses. Hoteliers can also improve their services and provide guests better experiences.
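One simple way to turn reviews into strengths and weaknesses is to average the star ratings of the reviews that mention each aspect of the stay. The sketch below shows that idea. The aspect keywords and reviews are hypothetical, and substring matching is a deliberately naive stand-in for a proper sentiment or topic model.

```python
from collections import defaultdict

# Hypothetical keyword lists per aspect (naive substring matching).
ASPECTS = {
    "cleanliness": ("clean", "dirty", "spotless"),
    "staff": ("staff", "reception", "friendly", "rude"),
    "breakfast": ("breakfast",),
}

# Hypothetical reviews as (star rating 1-5, review text).
REVIEWS = [
    (5, "Spotless room and very friendly staff."),
    (2, "Room was dirty and breakfast was cold."),
    (4, "Great breakfast, helpful reception."),
    (1, "Rude staff at check-in."),
]


def aspect_scores(reviews, aspects):
    """Average star rating of the reviews that mention each aspect."""
    ratings = defaultdict(list)
    for stars, text in reviews:
        lowered = text.lower()
        for aspect, words in aspects.items():
            if any(word in lowered for word in words):
                ratings[aspect].append(stars)
    return {aspect: sum(r) / len(r) for aspect, r in ratings.items()}


scores = aspect_scores(REVIEWS, ASPECTS)
# Lowest-scoring aspects first: candidate weaknesses to investigate.
for aspect, score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{aspect:12s} {score:.2f}")
```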

By using data analytics for hotels, new perspectives can be generated in the hospitality industry. Hoteliers can discover new and better ways to leverage big data for attracting customers and increasing sales.

3. Data analytics can improve the effectiveness of your marketing

With proper data analysis, hotels can make their marketing more effective by knowing exactly what to market to potential customers.

This enables advertisers to build more specific segments that can help identify key consumer groups who frequently visit hotels or other relevant locations.

If a guest books the property for the whole family, then with the help of data analytics, one can market the family activities available in the hotel.

If a guest usually travels on business, the hotel can showcase its business-related services, which are more likely to persuade that guest to return. You can also use targeted marketing to reach a specific demographic and beat your competition.
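The family and business examples above amount to assigning each booking to a segment and choosing what to promote for that segment. Here is a minimal rule-based sketch. The `Booking` fields, the segmentation rules, and the offers are all hypothetical; a real hotel would derive segments from its own booking and CRM data.

```python
from dataclasses import dataclass


@dataclass
class Booking:
    guest: str
    adults: int
    children: int
    weekend_nights: int
    weekday_nights: int
    corporate_rate: bool


# Hypothetical mapping from segment to what the hotel would promote.
OFFERS = {
    "family": "kids' club and family activity packages",
    "business": "meeting rooms, fast Wi-Fi, and late checkout",
    "leisure": "spa treatments and local tours",
}


def segment(b: Booking) -> str:
    """Illustrative rules: children mean family; corporate rate or solo weekday stays mean business."""
    if b.children > 0:
        return "family"
    if b.corporate_rate or (b.adults == 1 and b.weekend_nights == 0 and b.weekday_nights > 0):
        return "business"
    return "leisure"


bookings = [
    Booking("Garcia", adults=2, children=2, weekend_nights=2, weekday_nights=1, corporate_rate=False),
    Booking("Chen", adults=1, children=0, weekend_nights=0, weekday_nights=3, corporate_rate=False),
    Booking("Okafor", adults=2, children=0, weekend_nights=2, weekday_nights=0, corporate_rate=False),
]
for b in bookings:
    print(f"{b.guest}: {segment(b)} -> promote {OFFERS[segment(b)]}")
```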

4. Helps to scout the business environment and competition

To stay ahead in the industry, hotels must keep an eye out for the competition, and there is no better way of doing so than with the use of data analytics.

Competitor rates can be determined using real-time data analytics that compares your hotel’s current pricing strategy to your compset.

This helps determine the right price for each room through competitive pricing that runs 24/7, which can lead to more hotel bookings.
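Here is a minimal sketch of comparing your rate with the compset and suggesting a price. The pricing rule below (anchor on the compset median, then nudge by forecast occupancy) and its thresholds are illustrative assumptions, not an industry standard, and the competitor rates are made up.

```python
from statistics import median


def rate_position(own_rate, compset_rates):
    """Own rate as a percentage of the compset median (100 = priced at the median)."""
    return 100 * own_rate / median(compset_rates)


def suggest_rate(compset_rates, forecast_occupancy, high=0.85, low=0.60, step=0.05):
    """Hypothetical rule: start from the compset median, raise the price when
    forecast demand is strong, and lower it when demand is weak."""
    anchor = median(compset_rates)
    if forecast_occupancy >= high:
        anchor *= 1 + step
    elif forecast_occupancy <= low:
        anchor *= 1 - step
    return round(anchor, 2)


compset = [120, 130, 140, 150, 160]  # hypothetical competitor rates for tonight
print(rate_position(150, compset))   # our $150 rate relative to the $140 median
print(suggest_rate(compset, forecast_occupancy=0.90))
```

Using the median instead of the mean keeps one unusually cheap or expensive competitor from dragging the anchor.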

The data collected can help you figure out what others in the hotel industry are doing, and how to become better than them in terms of services and experience.

5. Aids in providing additional services

Hotels communicate with existing and potential guests in a number of ways, which lets them collect large amounts of data. When this data is properly gathered and analyzed, it reveals a great deal not just about the programs guests use but also about the services they take advantage of.

In addition, hotel owners can use this data to decide which new products and services to introduce. For example, if guests often request gym equipment that the hotel lacks, that is a signal it may be worth upgrading the gym.

Data analytics can also help in making decisions about forming partnerships with other companies, such as taxi companies, pubs, restaurants, and travel agencies.
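The gym example and the partnership idea both come down to finding frequent requests for things the hotel doesn't offer yet. Here is a minimal sketch with a hypothetical request log. In it, repeated "airport taxi" requests would hint at a possible taxi partnership.

```python
from collections import Counter

# Hypothetical guest request log and the services the hotel already offers.
REQUESTS = ["gym", "late checkout", "gym", "airport taxi", "yoga mat", "gym", "airport taxi"]
OFFERED = {"late checkout", "yoga mat"}


def unmet_demand(requests, offered, top=3):
    """Most frequent requests for things the hotel does not currently provide."""
    counts = Counter(r for r in requests if r not in offered)
    return counts.most_common(top)


print(unmet_demand(REQUESTS, OFFERED))  # [('gym', 3), ('airport taxi', 2)]
```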

The use of data analytics in the hotel industry is essential for increasing productivity, efficiency, and profitability. The outcomes of data analysis inform a business where it can optimize, whether operations need improvement, which activities can become more efficient, and more.

6. Can help in using social media platforms to your advantage

Social media platforms are the most powerful tools for engaging and connecting with the audience from all over the world.

These media tools are important, especially if you want to have better communication with your guests and remain ahead of the competition.

Social media makes it possible for guests to interact with the hotel in new ways: nowadays they use it for requests, needs, opinions, and concerns. Hoteliers, in turn, can use these platforms to give guests useful information about their services.

On the other hand, being active on social media also means that customers may use it to express their dissatisfaction over the services they received.

Types of data analysis reports your hotel needs

Effective analytics can aid in developing intelligent marketing and logistics strategies, as well as in identifying the target audience. This approach has proven to be quite significant in the hotel business.

Guests’ sentiments must be carefully monitored by hospitality services at all times. Here are some of the most important hotel reports to keep an eye on.

1. Identification of guests

For better and more successful service delivery and promotion, a hotel must first identify and understand its guests. Guests are more likely to leave positive feedback for a hotel that caters to their needs and preferences.

Identifying the target audience draws on criteria such as age, family background, income, hobbies, and previous purchases.

Hotels can use this information to serve current guests by personalizing services to their specific requirements, as well as to target new customers.

2. Report on transactions

By analyzing your daily transactions, you could learn more about what works best for your hotel. You could observe a pattern and figure out which days are more productive and which are not.

You can also track which payment methods your guests prefer. Here are some factors to consider when creating the transaction report:

- The total number of check-ins

- The total number of check-outs

- The number of unpaid check-outs

- Occupied and unoccupied rooms on average

- Method of payment
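The factors in this list can be computed from a simple transaction log. Here is a minimal sketch. The `Transaction` structure is a hypothetical assumption: an "unpaid check-out" is taken to be a check-out with no recorded payment method, and average occupied rooms come from a per-day count supplied separately.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transaction:
    day: str
    kind: str              # "check-in" or "check-out"
    method: Optional[str]  # payment method, or None if nothing was paid


def transaction_report(transactions, occupied_by_day, total_rooms):
    """Summarize check-ins, check-outs, unpaid check-outs, occupancy, and payment methods."""
    check_outs = [t for t in transactions if t.kind == "check-out"]
    avg_occupied = sum(occupied_by_day.values()) / len(occupied_by_day)
    return {
        "check_ins": sum(t.kind == "check-in" for t in transactions),
        "check_outs": len(check_outs),
        "unpaid_check_outs": sum(t.method is None for t in check_outs),
        "avg_occupied_rooms": avg_occupied,
        "avg_unoccupied_rooms": total_rooms - avg_occupied,
        "payment_methods": Counter(t.method for t in transactions if t.method),
    }


# Hypothetical two-day log for an 80-room hotel.
log = [
    Transaction("Mon", "check-in", "card"),
    Transaction("Mon", "check-in", "cash"),
    Transaction("Mon", "check-out", "card"),
    Transaction("Tue", "check-in", "card"),
    Transaction("Tue", "check-out", None),
    Transaction("Tue", "check-out", "card"),
]
report = transaction_report(log, occupied_by_day={"Mon": 62, "Tue": 58}, total_rooms=80)
print(report)
```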

3. Forecasting

Most businesses develop a sales forecast for strategic planning, using customer data and regular reporting. Such predictive analytics have proven to be useful tools in the data arsenal.

Forecasting is used to estimate future demand for products and services, and therefore future sales. It also helps businesses operate more efficiently by planning how they manage money.

Using analytics to forecast consumer behavior and to optimize inventory and product availability is known as revenue management. The objective is to offer the right products to consumers at the right time.
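As a starting point, a moving average makes a simple baseline forecast of demand. It is not what a revenue management system would actually use, since it lags behind trends and ignores seasonality, but it shows the idea. The weekly figures below are made up.

```python
def moving_average_forecast(history, window=3, horizon=2):
    """Naive baseline: each forecast is the mean of the last `window` values,
    with earlier forecasts fed back in for multi-step horizons."""
    if len(history) < window:
        raise ValueError("need at least `window` observations")
    values = list(history)
    forecasts = []
    for _ in range(horizon):
        nxt = sum(values[-window:]) / window
        forecasts.append(nxt)
        values.append(nxt)
    return forecasts


# Hypothetical rooms sold per week over the last six weeks.
rooms_sold = [70, 74, 78, 81, 84, 90]
print(moving_average_forecast(rooms_sold))
```

A more realistic forecast would add seasonality (for example, same week last year) and on-the-books reservations. The baseline is still useful for judging whether a fancier model actually helps.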

4. Report on statistics

Small hotel businesses must be able to measure specific indicators that provide them with an insight into how well the business is performing.

Here are the important factors that you need to consider for analysis:

- The total number of nights spent (Closed, occupied, and vacant rooms)

- Canceled reservations

- The average occupancy

- Length of stay

- Lead time

- Revenue per booking

- Daily pricing
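Most of these indicators can be computed directly from reservation records. Here is a minimal sketch for a hypothetical 10-room property over one week. It assumes every non-canceled stay falls inside the reporting period (no clipping at period boundaries), and it treats daily pricing as average revenue per occupied room night.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Reservation:
    booked_on: date
    arrival: date
    departure: date
    revenue: float
    canceled: bool = False


def booking_stats(reservations, rooms, period_days):
    """Key indicators for one period. Assumes every stay falls inside the period."""
    stays = [r for r in reservations if not r.canceled]
    nights = [(r.departure - r.arrival).days for r in stays]
    lead_times = [(r.arrival - r.booked_on).days for r in stays]
    revenue = sum(r.revenue for r in stays)
    return {
        "room_nights": sum(nights),
        "cancellation_rate": 1 - len(stays) / len(reservations),
        "average_occupancy": sum(nights) / (rooms * period_days),
        "avg_length_of_stay": sum(nights) / len(stays),
        "avg_lead_time_days": sum(lead_times) / len(stays),
        "revenue_per_booking": revenue / len(stays),
        "avg_daily_rate": revenue / sum(nights),
    }


# Hypothetical week (1-7 June 2022) at a 10-room property.
reservations = [
    Reservation(date(2022, 5, 1), date(2022, 6, 1), date(2022, 6, 4), 450.0),
    Reservation(date(2022, 5, 20), date(2022, 6, 2), date(2022, 6, 4), 260.0),
    Reservation(date(2022, 5, 25), date(2022, 6, 3), date(2022, 6, 5), 300.0, canceled=True),
    Reservation(date(2022, 5, 30), date(2022, 6, 5), date(2022, 6, 6), 140.0),
]
stats = booking_stats(reservations, rooms=10, period_days=7)
for name, value in stats.items():
    print(f"{name:20s} {value:.2f}")
```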

Conclusion

Lately, hoteliers have realized that using data analytics to implement Business Intelligence (BI) can improve their services, enhance their marketing strategies, and even increase their revenue.

It can also uncover insights that contribute to strategic business decisions. With data, you can find key answers that support product growth, better decision-making, optimized offerings, and lower costs.

You can access this data from the internet, through guests’ reviews, travel guides, and even on various social media platforms.

Data is the new oil and the ones making the best out of it are getting an edge over the competition. So, what are you waiting for? Start using hotel data analytics today.

Saturday, 11 June 2022

Web Crawling in Python

This excerpt has been exactly copied from Machine Learning Mastery, just for self education purposes. Copyrights belong to them.


In the old days, it was a tedious job to collect data, and it was sometimes very expensive. Machine learning projects cannot live without data. Luckily, we have a lot of data on the web at our disposal nowadays. We can copy data from the web to create our dataset. We can manually download files and save them to the disk. But we can do it more efficiently by automating the data harvesting. There are several tools in Python that can help the automation.

After finishing this tutorial, you will learn:

- How to use the requests library to read online data using HTTP

- How to read tables on web pages using pandas

- How to use Selenium to emulate browser operations

Let’s get started!

Overview

This tutorial is divided into three parts; they are:

- Using the requests library

- Reading tables on the web using pandas

- Reading dynamic content with Selenium

Using the Requests Library

When we talk about writing a Python program to read from the web, it is hard to avoid the requests library. You need to install it (as well as BeautifulSoup and lxml, which we will cover later):

```
pip install requests beautifulsoup4 lxml
```

It provides you with an interface that allows you to interact with the web easily.

The simplest use case is reading a web page from a URL:

```python
import requests

# Lat-Lon of New York
URL = "https://weather.com/weather/today/l/40.75,-73.98"
resp = requests.get(URL)
print(resp.status_code)
print(resp.text)
```

```
200
<!doctype html><html dir="ltr" lang="en-US"><head>
      <meta data-react-helmet="true" charset="utf-8"/><meta data-react-helmet="true" name="viewport" content="width=device-width, initial-scale=1, viewport-fit=cover"/>
...
```

If you’re familiar with HTTP, you can probably recall that a status code of 200 means the request is successfully fulfilled. Then we can read the response. In the above, we read the textual response and get the HTML of the web page. Should it be a CSV or some other textual data, we can get them in the text attribute of the response object. For example, this is how we can read a CSV from the Federal Reserve Economics Data:

```python
import io

import pandas as pd
import requests

URL = "https://fred.stlouisfed.org/graph/fredgraph.csv?id=T10YIE&cosd=2017-04-14&coed=2022-04-14"
resp = requests.get(URL)
if resp.status_code == 200:
    csvtext = resp.text
    csvbuffer = io.StringIO(csvtext)
    df = pd.read_csv(csvbuffer)
    print(df)
```

```
            DATE  T10YIE
0     2017-04-17    1.88
1     2017-04-18    1.85
2     2017-04-19    1.85
3     2017-04-20    1.85
4     2017-04-21    1.84
...          ...     ...
1299  2022-04-08    2.87
1300  2022-04-11    2.91
1301  2022-04-12    2.86
1302  2022-04-13    2.8
1303  2022-04-14    2.89

[1304 rows x 2 columns]
```

If the data is in JSON format, we can read it as text or let requests decode it for us. For example, the following pulls some data from GitHub in JSON format and converts it into a Python dictionary:

```python
import requests

URL = "https://api.github.com/users/jbrownlee"
resp = requests.get(URL)
if resp.status_code == 200:
    data = resp.json()
    print(data)
```

```
{'login': 'jbrownlee',
 'id': 12891,
 'node_id': 'MDQ6VXNlcjEyODkx',
 'avatar_url': 'https://avatars.githubusercontent.com/u/12891?v=4',
 'gravatar_id': '',
 'url': 'https://api.github.com/users/jbrownlee',
 'html_url': 'https://github.com/jbrownlee',
 ...
 'company': 'Machine Learning Mastery',
 'blog': 'http://MachineLearningMastery.com',
 'location': None,
 'email': None,
 'hireable': None,
 'bio': 'Making developers awesome at machine learning.',
 'twitter_username': None,
 'public_repos': 5,
 'public_gists': 0,
 'followers': 1752,
 'following': 0,
 'created_at': '2008-06-07T02:20:58Z',
 'updated_at': '2022-02-22T19:56:27Z'
}
```

But if the URL gives you some binary data, such as a ZIP file or a JPEG image, you need to get them in the content attribute instead, as this would be the binary data. For example, this is how we can download an image (the logo of Wikipedia):

```python
import requests

URL = "https://en.wikipedia.org/static/images/project-logos/enwiki.png"
wikilogo = requests.get(URL)
if wikilogo.status_code == 200:
    with open("enwiki.png", "wb") as fp:
        fp.write(wikilogo.content)
```

Given we already obtained the web page, how should we extract the data? This is beyond what the requests library can provide to us, but we can use a different library to help. There are two ways we can do it, depending on how we want to specify the data.

The first way is to consider the HTML as a kind of XML document and use the XPath language to extract the element. In this case, we can make use of the lxml library to first create a document object model (DOM) and then search by XPath:

```python
import requests
from lxml import etree

URL = "https://weather.com/weather/today/l/40.75,-73.98"
resp = requests.get(URL)

# Create DOM from HTML text
dom = etree.HTML(resp.text)
# Search for the temperature element and get the content
elements = dom.xpath("//span[@data-testid='TemperatureValue' and contains(@class,'CurrentConditions')]")
print(elements[0].text)
```

```
61°
```

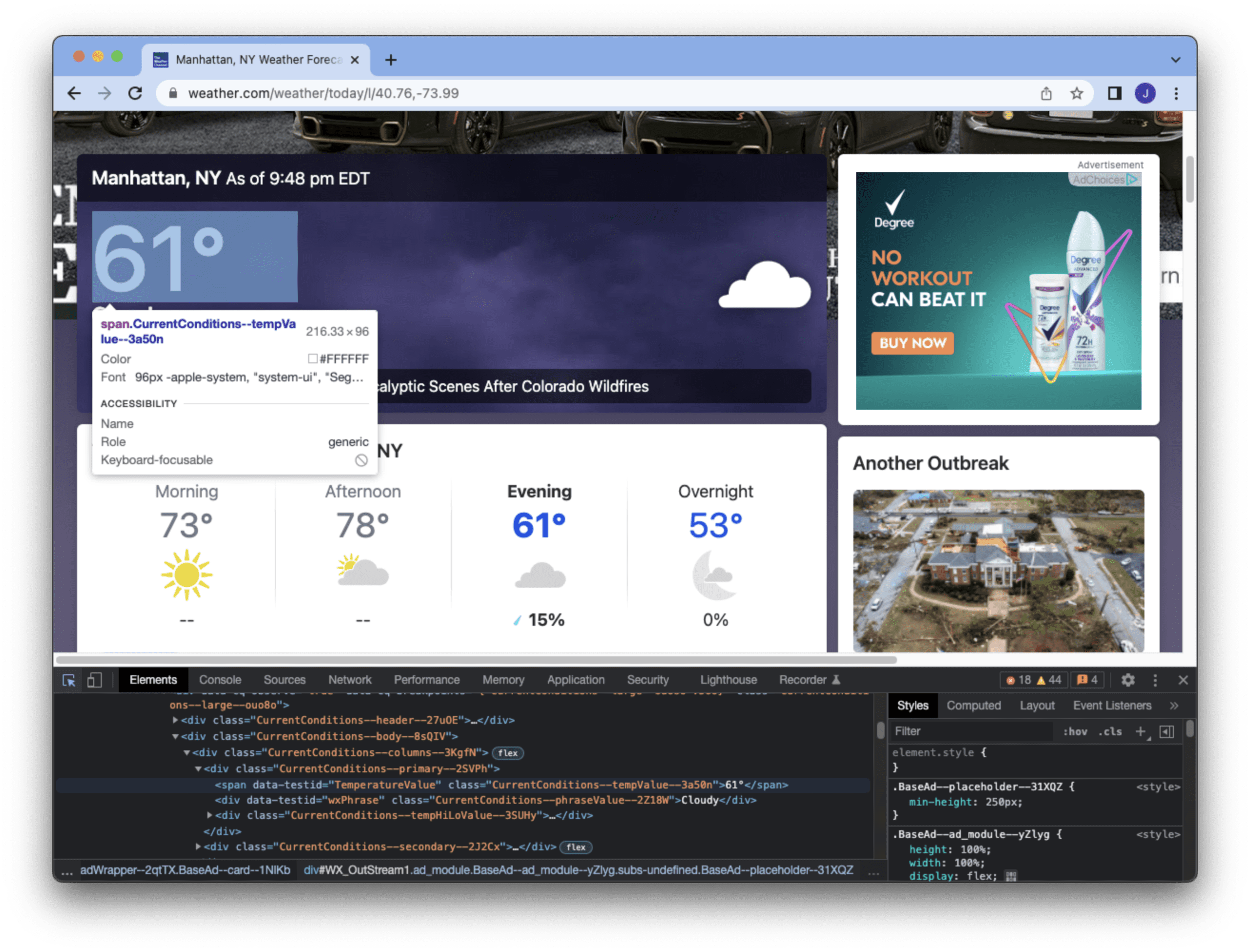

XPath is a string that specifies how to find an element. The lxml DOM object provides an xpath() method that searches for all elements matching the XPath string, so it can return multiple matches. The XPath above finds a <span> element anywhere in the document whose data-testid attribute equals “TemperatureValue” and whose class attribute contains “CurrentConditions.” We can work this out by inspecting the HTML source with the browser’s developer tools (e.g., Chrome’s, as in the screenshot above).

This example finds the current temperature of New York City, which this particular element on the page displays. We know the first element matched by the XPath is the one we need, so we read the text inside its <span> tag.
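To experiment with XPath without hitting the network, you can run a similar query against a small inline snippet. The sketch below swaps in the standard library's `xml.etree.ElementTree`, which supports only a limited XPath subset: attribute-equality predicates work, but functions such as `contains()` do not, so the class test is done in Python. It also requires well-formed markup. The snippet is hypothetical; real pages like the weather site generally need lxml's forgiving HTML parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for the weather page's markup.
SNIPPET = """
<html><body><div>
  <span data-testid="TemperatureValue" class="CurrentConditions--tempValue">61°</span>
  <span data-testid="TemperatureValue" class="DailyForecast--high">68°</span>
</div></body></html>
"""

root = ET.fromstring(SNIPPET.strip())

# ElementTree understands attribute-equality predicates such as [@name='value'],
# but not XPath functions like contains(), so the class test is done in Python.
candidates = root.findall(".//span[@data-testid='TemperatureValue']")
current = [el for el in candidates if "CurrentConditions" in el.get("class", "")]
print(current[0].text)  # 61°
```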

The other way is to use CSS selectors on the HTML document, for which we can use the BeautifulSoup library:

```python
import requests
from bs4 import BeautifulSoup

URL = "https://weather.com/weather/today/l/40.75,-73.98"
resp = requests.get(URL)

soup = BeautifulSoup(resp.text, "lxml")
elements = soup.select('span[data-testid="TemperatureValue"][class^="CurrentConditions"]')
print(elements[0].text)
```

```
61°
```

In the above, we first pass our HTML text to BeautifulSoup. BeautifulSoup supports various HTML parsers, each with different capabilities; here we use lxml as the parser, as recommended by BeautifulSoup (it is also often the fastest). CSS selectors are a different mini-language, with pros and cons compared to XPath. The selector above targets the same element as the XPath in the previous example, although strictly speaking [class^="CurrentConditions"] requires the class attribute to begin with that string, whereas the XPath’s contains() matches it anywhere in the attribute. On this page, both pick out the same first element, so we get the same temperature.
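To make the difference between `^=` and `contains()` concrete without a network call, here is a sketch using only the standard library's `html.parser`. It mimics the CSS selector's matching rule on a made-up snippet. This is not how BeautifulSoup works internally, and the parser assumes matched spans contain no nested `<span>` elements.

```python
from html.parser import HTMLParser

# Hypothetical markup: the second span's class contains "CurrentConditions"
# but does not begin with it.
SNIPPET = """
<div>
  <span data-testid="TemperatureValue" class="CurrentConditions--tempValue">61°</span>
  <span data-testid="TemperatureValue" class="Widget CurrentConditions--feelsLike">59°</span>
</div>
"""


class TemperatureFinder(HTMLParser):
    """Collect the text of spans matching
    span[data-testid="TemperatureValue"][class^="CurrentConditions"].
    Assumes the matched spans contain no nested <span> elements."""

    def __init__(self):
        super().__init__()
        self._capturing = False
        self.matches = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if (tag == "span"
                and attrs.get("data-testid") == "TemperatureValue"
                and (attrs.get("class") or "").startswith("CurrentConditions")):
            self._capturing = True
            self.matches.append("")

    def handle_endtag(self, tag):
        if tag == "span":
            self._capturing = False

    def handle_data(self, data):
        if self._capturing:
            self.matches[-1] += data


finder = TemperatureFinder()
finder.feed(SNIPPET)
finder.close()
print(finder.matches)  # ['61°']; an XPath contains() test would match both spans
```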

The following complete program prints the current temperature of New York according to the real-time information on the web:

```python
import requests
from bs4 import BeautifulSoup
from lxml import etree

# Reading temperature of New York
URL = "https://weather.com/weather/today/l/40.75,-73.98"
resp = requests.get(URL)

if resp.status_code == 200:
    # Using lxml
    dom = etree.HTML(resp.text)
    elements = dom.xpath("//span[@data-testid='TemperatureValue' and contains(@class,'CurrentConditions')]")
    print(elements[0].text)

    # Using BeautifulSoup
    soup = BeautifulSoup(resp.text, "lxml")
    elements = soup.select('span[data-testid="TemperatureValue"][class^="CurrentConditions"]')
    print(elements[0].text)
```

As you can imagine, you can collect a time series of the temperature by running this script on a regular schedule. Similarly, we can collect data automatically from various websites. This is how we can obtain data for our machine learning projects.

Reading Tables on the Web Using Pandas

Very often, web pages will use tables to carry data. If the page is simple enough, we may even skip inspecting it to find out the XPath or CSS selector and use pandas to get all tables on the page in one shot. It is simple enough to be done in one line:

```python
import pandas as pd

tables = pd.read_html("https://www.federalreserve.gov/releases/h15/")
print(tables)
```

```
[  Instruments                            2022Apr7 2022Apr8 2022Apr11 2022Apr12 2022Apr13
0  Federal funds (effective) 1 2 3            0.33     0.33      0.33      0.33      0.33
1  Commercial Paper 3 4 5 6                    NaN      NaN       NaN       NaN       NaN
2  Nonfinancial                                NaN      NaN       NaN       NaN       NaN
3  1-month                                    0.30     0.34      0.36      0.39      0.39
4  2-month                                    n.a.     0.48      n.a.      n.a.      n.a.
5  3-month                                    n.a.     n.a.      n.a.      0.78      0.78
6  Financial                                   NaN      NaN       NaN       NaN       NaN
7  1-month                                    0.49     0.45      0.46      0.39      0.46
8  2-month                                    n.a.     n.a.      0.60      0.71      n.a.
9  3-month                                    0.85     0.81      0.75      n.a.      0.86
10 Bank prime loan 2 3 7                      3.50     3.50      3.50      3.50      3.50
11 Discount window primary credit 2 8         0.50     0.50      0.50      0.50      0.50
12 U.S. government securities                  NaN      NaN       NaN       NaN       NaN
13 Treasury bills (secondary market) 3 4       NaN      NaN       NaN       NaN       NaN
14 4-week                                     0.21     0.20      0.21      0.19      0.23
15 3-month                                    0.68     0.69      0.78      0.74      0.75
16 6-month                                    1.12     1.16      1.22      1.18      1.17
17 1-year                                     1.69     1.72      1.75      1.67      1.67
18 Treasury constant maturities                NaN      NaN       NaN       NaN       NaN
19 Nominal 9                                   NaN      NaN       NaN       NaN       NaN
20 1-month                                    0.21     0.20      0.22      0.21      0.26
21 3-month                                    0.68     0.70      0.77      0.74      0.75
22 6-month                                    1.15     1.19      1.23      1.20      1.20
23 1-year                                     1.78     1.81      1.85      1.77      1.78
24 2-year                                     2.47     2.53      2.50      2.39      2.37
25 3-year                                     2.66     2.73      2.73      2.58      2.57
26 5-year                                     2.70     2.76      2.79      2.66      2.66
27 7-year                                     2.73     2.79      2.84      2.73      2.71
28 10-year                                    2.66     2.72      2.79      2.72      2.70
29 20-year                                    2.87     2.94      3.02      2.99      2.97
30 30-year                                    2.69     2.76      2.84      2.82      2.81
31 Inflation indexed 10                        NaN      NaN       NaN       NaN       NaN
32 5-year                                    -0.56    -0.57     -0.58     -0.65     -0.59
33 7-year                                    -0.34    -0.33     -0.32     -0.36     -0.31
34 10-year                                   -0.16    -0.15     -0.12     -0.14     -0.10
35 20-year                                    0.09     0.11      0.15      0.15      0.18
36 30-year                                    0.21     0.23      0.27      0.28      0.30
37 Inflation-indexed long-term average 11     0.23     0.26      0.30      0.30      0.33,       0               1
0  n.a.  Not available.]
```

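The read_html() function in pandas reads a URL and finds all the tables on the page. Each table is converted into a pandas DataFrame, and all of them are returned in a list. In this example, we are reading the various interest rates from the Federal Reserve. The page has only one table of rates; the second, tiny DataFrame at the end of the output is a legend explaining the abbreviation "n.a.". pandas identifies the table columns automatically. Note that read_html() needs an HTML parsing library, such as lxml (or BeautifulSoup with html5lib), to be installed.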
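To see the shape of what read_html() returns without depending on a live website, the sketch below parses a small, made-up HTML string containing one data table and one legend table. It assumes lxml is installed (the default parser pandas uses for read_html()). The string is wrapped in io.StringIO because newer pandas versions deprecate passing literal HTML directly.

```python
from io import StringIO

import pandas as pd

# Made-up page: a data table followed by a small legend table
html = """
<html><body>
<table>
  <tr><th>Instrument</th><th>Rate</th></tr>
  <tr><td>1-month</td><td>0.30</td></tr>
  <tr><td>3-month</td><td>0.68</td></tr>
</table>
<table>
  <tr><td>n.a.</td><td>Not available.</td></tr>
</table>
</body></html>
"""

# One DataFrame per <table>; an all-<th> first row becomes the header
tables = pd.read_html(StringIO(html))
print(len(tables))   # 2
print(tables[0])     # columns: Instrument, Rate
print(tables[1])     # columns 0 and 1, since this table has no header row
```

The legend table gets integer column labels because it has no header cells, which is the same pattern seen at the end of the Federal Reserve output.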

Chances are that not all tables are what we are interested in. Sometimes, the web page will use a table merely as a way to format the page, but pandas may not be smart enough to tell. Hence we need to test and cherry-pick the result returned by the read_html() function.
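Two common ways to cherry-pick are sketched below on another made-up page, where the first table is only used for layout. read_html() accepts a match argument (a string or regular expression) and returns only the tables whose text matches it; alternatively, you can read every table and keep those whose columns look right. Checking for an expected set of column names is just one possible heuristic, assumed here for illustration, and the sketch again assumes lxml is installed.

```python
from io import StringIO

import pandas as pd

# Made-up page: a layout table (navigation links) followed by a data table
html = """
<table>
  <tr><td>Home</td><td>About</td><td>Contact</td></tr>
</table>
<table>
  <tr><th>Instrument</th><th>Rate</th></tr>
  <tr><td>1-year</td><td>1.78</td></tr>
  <tr><td>2-year</td><td>2.47</td></tr>
</table>
"""

# Option 1: let pandas keep only tables whose text matches a regex
matched = pd.read_html(StringIO(html), match="Rate")

# Option 2: read everything, then keep tables that have the columns we expect
all_tables = pd.read_html(StringIO(html))
wanted = [df for df in all_tables if {"Instrument", "Rate"} <= set(df.columns)]

print(len(all_tables), len(matched), len(wanted))  # 2 1 1
```

If no table matches, read_html() raises a ValueError rather than returning an empty list, so a script may want to catch that case.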

Reading Dynamic Content With Selenium

Many modern web pages rely heavily on JavaScript. This gives users a richer experience but makes it harder for a program to extract data. One example is Yahoo's home page: if we just load the page and find all news headlines, we get far fewer than what we can see in the browser:

```python
import requests
from lxml import etree

# Read Yahoo home page
URL = "https://www.yahoo.com/"
resp = requests.get(URL)
dom = etree.HTML(resp.text)

# Print news headlines
elements = dom.xpath("//h3/a[u[@class='StretchedBox']]")
for elem in elements:
    print(etree.tostring(elem, method="text", encoding="unicode"))
```

This is because pages like this rely on JavaScript to populate their content. Popular web frameworks such as AngularJS and React power many pages in this category. Python libraries such as requests do not understand JavaScript, so the HTML they fetch differs from what the browser shows after running the scripts. If the data you want is on such a page, you can study how the JavaScript is invoked and mimic the browser's behavior in your program, but this is usually too tedious to be worthwhile.
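To make this concrete, consider a minimal, made-up page in which one headline is written directly in the HTML and a second one is created by a script. The sketch below uses the standard library's html.parser instead of lxml so that it has no dependencies; like lxml, it only reads the markup and never executes JavaScript. The page content and the HeadlineCounter class are hypothetical.

```python
from html.parser import HTMLParser

# Made-up page: one headline is in the markup, the second one would only
# exist after a browser runs the <script> element
PAGE = """
<html><body>
  <h3>Static headline</h3>
  <div id="news"></div>
  <script>
    const h3 = document.createElement("h3");
    h3.textContent = "Headline added by JavaScript";
    document.getElementById("news").appendChild(h3);
  </script>
</body></html>
"""


class HeadlineCounter(HTMLParser):
    """Count <h3> start tags found in the raw markup (no JavaScript is run)."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "h3":
            self.count += 1


counter = HeadlineCounter()
counter.feed(PAGE)
print(counter.count)  # 1, although a browser would display two headlines
```

The script body is treated as plain text by the parser, which is exactly why requests plus an HTML parser sees fewer headlines than a browser does.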

The other way is to ask a real browser to read the web page rather than using requests. This is what Selenium can do. Before we can use it, we need to install the library:

```shell
pip install selenium
```

But Selenium is only a framework to control browsers. You need the browser installed on your computer, as well as a driver that connects Selenium to the browser. If you intend to use Chrome, you need to download and install ChromeDriver too, and put it in a directory on your executable search path (PATH) so that Selenium can invoke it like a normal command. For example, on Linux, you can extract the chromedriver executable from the downloaded ZIP file and put it in /usr/local/bin. Recent Selenium releases (4.6 and later) also include Selenium Manager, which can locate or download a matching driver automatically, so this manual step may not be necessary.

Similarly, if you’re using Firefox, you need the GeckoDriver. For more details on setting up Selenium, you should refer to its documentation.
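If you install a driver manually, it can help to confirm that it is actually reachable on your PATH before debugging Selenium itself. The following is a minimal sketch using only the standard library's shutil.which(). The executable names chromedriver and geckodriver are the conventional ones, and the helper name find_drivers() is hypothetical. Recent Selenium versions may fetch a driver for you, so a "not found" result is not always an error.

```python
import shutil

# Conventional driver executable names; on Windows, shutil.which() also
# tries the extensions listed in PATHEXT (such as .exe)
DRIVERS = {"Chrome": "chromedriver", "Firefox": "geckodriver"}


def find_drivers(drivers=DRIVERS):
    """Return {browser name: full path of its driver, or None if not on PATH}."""
    return {browser: shutil.which(exe) for browser, exe in drivers.items()}


for browser, path in find_drivers().items():
    print(f"{browser}: {path or 'not found on PATH'}")
```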

Afterward, you can use a Python script to control the browser behavior. For example:

```python
import time

from selenium import webdriver
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.common.by import By

# Launch Chrome browser in headless mode
options = webdriver.ChromeOptions()
options.add_argument("--headless")
browser = webdriver.Chrome(options=options)

# Load web page
browser.get("https://www.yahoo.com")

# Network transport takes time. Wait until the page is fully loaded
def is_ready(browser):
    return browser.execute_script(r"""
        return document.readyState === 'complete'
    """)
WebDriverWait(browser, 30).until(is_ready)

# Scroll to bottom of the page to trigger JavaScript action
browser.execute_script("window.scrollTo(0, document.body.scrollHeight);")
time.sleep(1)
WebDriverWait(browser, 30).until(is_ready)

# Search for news headlines and print
elements = browser.find_elements(By.XPATH, "//h3/a[u[@class='StretchedBox']]")
for elem in elements:
    print(elem.text)

# Close the browser and end the WebDriver session once finished
browser.quit()
```

The above code works as follows. We first launch the browser in headless mode, meaning we ask Chrome to start without displaying a window on the screen. This is important if we want to run our script remotely, as there may not be any GUI support. Note that every browser is developed differently, and thus the options syntax we used is specific to Chrome. If we use Firefox, the code would be this instead:

```python
# Selenium 4 style: request headless mode through a Firefox command-line argument
options = webdriver.FirefoxOptions()
options.add_argument("-headless")
browser = webdriver.Firefox(options=options)
```

After we launch the browser, we give it a URL to load. But since it takes time for the network to deliver the page and for the browser to render it, we should wait until the browser is ready before we proceed to the next operation. We detect whether the browser has finished loading the page by using JavaScript: the execute_script() function makes Selenium run a piece of JavaScript for us and returns its result. We leverage Selenium's WebDriverWait tool to run this check repeatedly until it succeeds or until 30 seconds pass, in which case it raises a TimeoutException. Once the page is loaded, we scroll to the bottom of the page so that the JavaScript is triggered to load more content. Then we wait for one second unconditionally to give the browser time to trigger the JavaScript, and then wait until the page is ready again. Afterward, we can extract the news headline elements using XPath (or alternatively using a CSS selector). Because the browser is an external program, we are responsible for closing it in our script.
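The waiting logic described above boils down to polling a condition until it holds or a deadline passes. The sketch below reimplements that idea in plain Python to make it concrete; it is a simplified stand-in, not Selenium's actual code. The names wait_until() and page_is_ready() are hypothetical, and unlike WebDriverWait (which polls every 0.5 seconds by default and raises TimeoutException), it raises Python's built-in TimeoutError.

```python
import time


def wait_until(condition, timeout=30.0, poll_interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds
    have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Usage: a fake "page" whose readyState becomes 'complete' on the third check
states = iter(["loading", "interactive", "complete"])


def page_is_ready():
    return next(states) == "complete"


print(wait_until(page_is_ready, timeout=5, poll_interval=0.01))  # True
```

Returning the condition's value (rather than just True) mirrors how WebDriverWait.until() hands back whatever the condition returned, which is handy when the condition looks up an element.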

Using Selenium differs from using the requests library in several aspects. First, you do not hold the web content in your Python code directly. Instead, you query the browser's content whenever you need it. Hence the web elements returned by the find_elements() function refer to objects inside the external browser, so we must not close the browser before we finish consuming them. Second, all operations should be based on browser interaction rather than network requests, so you control the browser by emulating keyboard and mouse actions. In return, you get a full-featured browser with JavaScript support. For example, you can use JavaScript to check the size and position of an element on the page, which you can know only after the HTML elements are rendered.

The Selenium framework provides many more functions than we can cover here. It is powerful, but since it drives a real browser, it is more resource-demanding and much slower than the requests library. Usually, it is the last resort for harvesting information from the web.

Further Reading

Another famous web crawling library in Python that we didn't cover above is Scrapy. It is like combining the requests library and BeautifulSoup into one. The web protocol is complex: sometimes we need to manage cookies or send extra data with a request using the POST method. All of this can be done with the requests library by using a different function or extra arguments. The following are some resources for you to go deeper:

Articles

- An overview of HTTP from MDN

- XPath from MDN

- XPath tutorial from W3Schools

- CSS Selector Reference from W3Schools

- Selenium Python binding

API documentation

Books

- Python Web Scraping, 2nd Edition, by Katharine Jarmul and Richard Lawson

- Web Scraping with Python, 2nd Edition, by Ryan Mitchell

- Learning Scrapy, by Dimitrios Kouzis-Loukas

- Python Testing with Selenium, by Sujay Raghavendra

- Hands-On Web Scraping with Python, by Anish Chapagain

Summary

In this tutorial, you saw the tools we can use to fetch content from the web.

Specifically, you learned:

- How to use the requests library to send the HTTP request and extract data from its response

- How to build a document object model from HTML so we can find some specific information on a web page

- How to read tables on a web page quickly and easily using pandas

- How to use Selenium to control a browser to tackle dynamic content on a web page